Main Idea

When you enter your story on our website, the app generates viewable VR content. It shows real-life characters (images) and objects placed in the proper locations within a scene (background), along with background sound chosen to match the characters, actions, and mood of the story's plot.

Challenges

Integrating and mapping all the components to each other (cloud, machine learning, VR, and web development) was the hardest part. It was the first hackathon for most of us, none of us had prior AWS experience, and most of us had never worked with VR. We learned everything on the fly and implemented the project end-to-end within a day and a half. It was a tough job, done well, with extreme dedication.

Interface

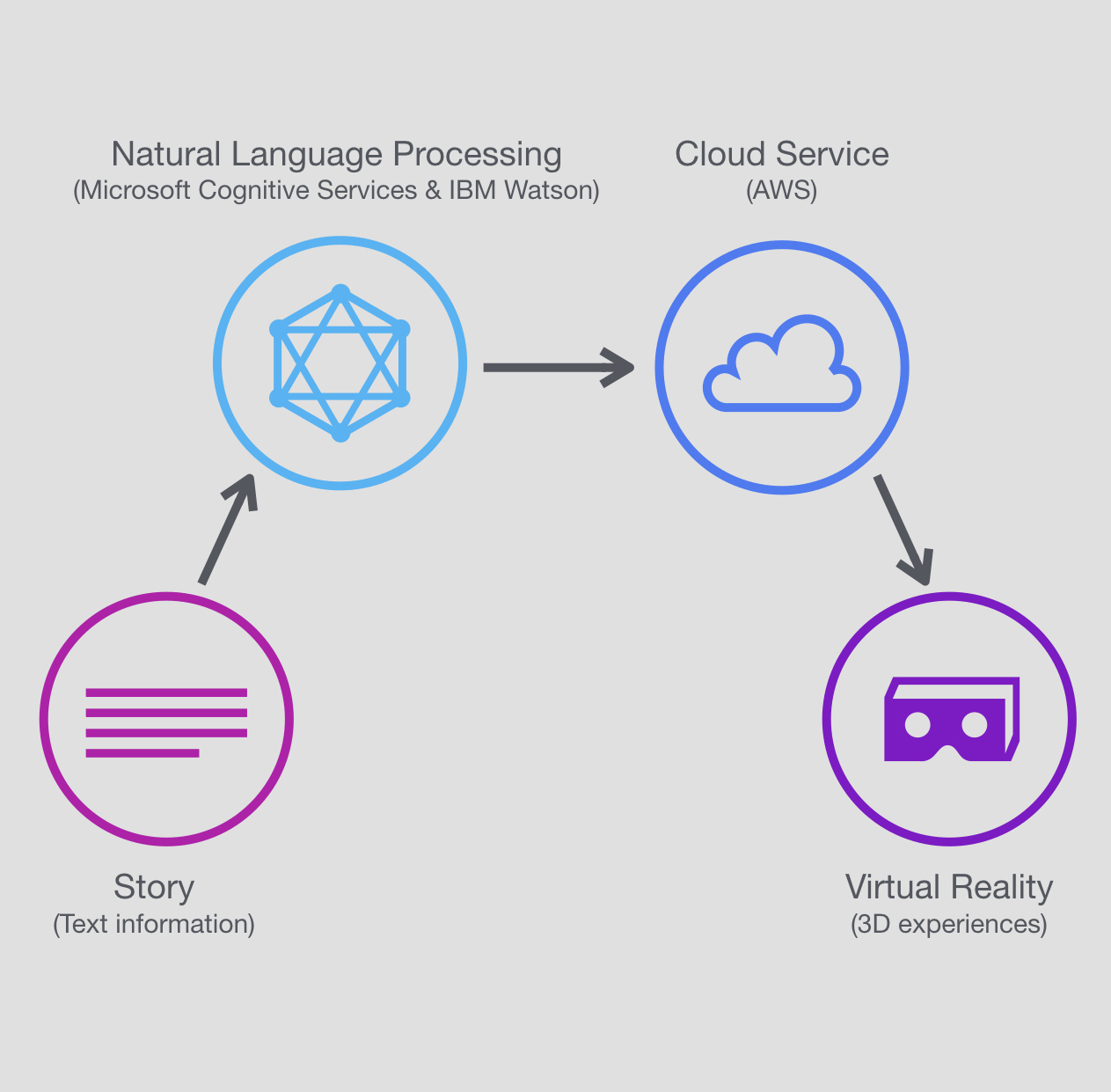

We have built a website where you can enter your own story as plain text. When you click Submit, we use machine learning and natural language processing to identify the main objects, their descriptions, the background, and the overall mood of the story. For this we used the machine learning APIs of Microsoft Cognitive Services and the IBM Watson Tone Analyzer. We combine the outputs of these APIs into a single JSON file, which is stored in the cloud on Amazon Web Services (AWS). Unity picks up the file and parses it to load assets and related animations for the objects, plus background sounds that match the mood of the plot. The objects are placed relative to each other against the appropriate background, and background music plays in real time. The entire experience is viewable on Google Cardboard. We chose Cardboard because it is one of the cheapest VR devices available while still offering a great experience, so more users can access the app.
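The step that combines the API outputs into one JSON file can be sketched roughly as follows. This is a minimal illustration, not the project's actual code. It assumes the tone result follows the Watson Tone Analyzer v3 `document_tone.tones` layout, where each tone has a `tone_id` and a `score`. The object and background inputs, the `MOOD_TO_SOUND` table, the sound file paths, and the JSON field names are all hypothetical.

```python
import json
from typing import Any, Dict, List, Optional

# Hypothetical mapping from tone IDs to sound clips bundled with the Unity project.
MOOD_TO_SOUND = {
    "joy": "sounds/cheerful.ogg",
    "sadness": "sounds/melancholy.ogg",
    "anger": "sounds/tense.ogg",
    "fear": "sounds/suspense.ogg",
}
DEFAULT_SOUND = "sounds/ambient.ogg"  # hypothetical fallback clip


def dominant_tone(tone_response: Dict[str, Any]) -> Optional[str]:
    """Return the tone_id with the highest score, or None if no tones were found.

    Assumes a {"document_tone": {"tones": [{"tone_id": ..., "score": ...}]}} layout.
    """
    tones = tone_response.get("document_tone", {}).get("tones", [])
    if not tones:
        return None
    return max(tones, key=lambda t: t["score"])["tone_id"]


def build_scene(objects: List[Dict[str, str]], background: str,
                tone_response: Dict[str, Any]) -> str:
    """Merge the analysis results into a single JSON document for Unity to read."""
    mood = dominant_tone(tone_response)
    scene = {
        "background": background,  # hypothetical field: scene/location keyword
        "objects": objects,        # hypothetical field: [{"name": ..., "description": ...}]
        "mood": mood,
        # Unknown or missing moods fall back to a neutral ambient track.
        "sound": MOOD_TO_SOUND.get(mood, DEFAULT_SOUND),
    }
    return json.dumps(scene, indent=2)


if __name__ == "__main__":
    tones = {"document_tone": {"tones": [
        {"tone_id": "sadness", "tone_name": "Sadness", "score": 0.72},
        {"tone_id": "joy", "tone_name": "Joy", "score": 0.55},
    ]}}
    print(build_scene([{"name": "dog", "description": "small brown dog"}], "park", tones))
```

Picking only the highest-scoring tone keeps the Unity side simple, because it needs to choose just one background track. The resulting string could then be uploaded to AWS, for example to an S3 bucket with boto3's `put_object`, for Unity to download. That step is left out here so the sketch runs offline.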


Use Cases

- Can be used extensively by educational institutions to engage students in learning, and by parents (especially when they are away) to give kids a great learning experience. Above all, it is well suited to entertainment.

- Can be used to help children with learning difficulties (such as dyslexia or autism) read and experience stories.

- Can be used by writers to visualize their plots and see what a movie adaptation might look like, and to draw more readers to their writing through this immersive experience.

- Can be used by directors to visualize plots, estimate costs based on the required assets and locations, and help decide which actors to cast.

- Can be used by book lovers who feel that movie adaptations do not do justice to their favorite books.